Another donation

21 Oct 2020We have just received another donation of ~€100, the donor asked not to disclose his identity.

We have just received another donation of ~€100, the donor asked not to disclose his identity.

Since the last status update (that is, within the last month) the following language features have been implemented:

* All operations and relations on basic types (save reals)

* The relation IN for integers and sets

* Global RECORD variables

* Global ARRAY variables - thei required adjustment of the addressing mode within the backend

* Compilation of a global proper PROCEDURE body

* Local procedure variable addressing

* Value parameters of basic types (save reals)

* Variable parameters of basic types (save reals)

* Value and variable parameters of structured types, including open and fixed arrays

* Standard procedure LEN applied to global and local arrays

* Procedure call statement

* All of the above covered with tests

I am now looking at implementing strings, [ccall] calling convention and some sort of static linking (into a host OS executable). These three features will make it possible to compile a “Hello world” program.

When that milestone is achieved, I will need to implement IF and WHILE statements, and from then on it would be possible to develop a proto-kernel for BlackBox/64 alongside with Herschel. In greater detail:

As the development of Herschel continues, it will become necessary to implement features dealing with dynamic memory. Testing these features requires a run-time environment. Normally, this environment is provided by the BB Kernel. But it is not available for 64bits! Instead of developing a special testing env for the purposes of Herschel testing and development, I am looking at starting with a proto-kernel and gradually developing it into a full-featured 64-bit BB kernel.

The first and most essential features to put in the proto-kernel are, well, trap handling and trap reporting. You do expect Herschel-compiled programs to crash while Herschel is under development, and post-mortem analysis is critical to removing errors in Herschel. So, there is a need for [ccall] support in order to make host OS calls to output to the console and to set up trap handling and signal callbacks. (to handle segmentation violation).

Once console output and trap/signal handling is implemented, the memory allocator (procedure NEW implementation) can be put into the proto-kernel. It will require libc’s malloc calls and maybe some others; most other processing is done within the kernel with a limited number of CP features. (Note that the garbage collector doesn’t need to be ported from 32bit kernel until the whole Herschel is finished.)

Thus, with a proto-kernel as described it will be possible to continue the testing and development of Herschel without the extra work of creating a special testing environment. Eventually, the proto-kernel will become the new BB 64-bit kernel by adding to it all the features that the kernel provides.

AS of today, September 9th:

* The code generator is up and running

* Global variables can be addressed

* Module body (BEGIN section of a module) can be compiled

* The assignment operator works (for a limited number of basic types)

* The basic types that are implemented are: BYTE SHORTINT INTEGER LONGINT CHAR SHORTCHAR BOOLEAN SET

* Assignments of the form var := literal/var are implemented for the aforementioned types

* Safe type casts with CHR ORD and unsafe casts SYSTEM.VAL are implemented for the aforementioned types

* All of the above features coverd with tests

* Two kinds of standards (correct test responses) for the code generator are produced: the actual generated machine text standards and the field test standards. A field test standard is the values of global variables that the generated code ‘leaves’.

* A testing infrastructure for the code generator is implemented

* The CG tester consisting of an HTTP server receiving requests and a primitive loader actually executing generated 64-bit code is implemented in A2 - the Oberon-based active objects operating system

* The testing panel (front-end) in BB has been updated to accomodate now both front-end and back-end tests and standards.

* The test base now has about 100 test.

As I have mentioned before, I make amendments to the compiler ‘vertically’, not ‘horizontally’. By this I mean the following: I set a task - for instance, to compile the assignment of the form y(LONGINT) := x(INTEGER) - and then I amend all procedures in all modules of the compiler that relate to this task. And even within individual procedures (which are sometimes sizy) I only amend the branches that relate to my task (commenting the other branches out, or, better yet, protecting them with HALT(126) not yet implemented).

Doing it ‘horizontally’ would mean that I need to take, say, a procedure that is responsible for type conversions, and amending it all at once - that is, all possible type combinations. Since I’m a bear of little brain, and just learning the CP2 compiler, it would be quite a difficult and overwhelming job. Besides, it would be harder to test.

Besides, the vertical approach is a shorter path to intermediary results and making myself happy; it also allows to check hypothesis quickly (hypothesis like, Will such and such opcode do the job that I need done) and identify pitfalls early (for instance, turned out that opcode A2 MOV AX, mem cannot be used any more - or is inconvenient, and it had to be replaced.

But the vertical approach also has a price tag: it means I cannot “complete” a procedure: make all amendments it needs, debug it, test it out sufficiently and forget about it. As I choose new tasks, I go back to procedures I have amended before and amend them again - I unfold or uncomment branches, sometimes even change the branching logic. This implies the risk of breaking what had been done before.

I faced the same issue - and solved it efficiently - when I was doing the front-end of Herschel. And solved it with a testing infrastructure and a set of tests.

For each task that is set and solved I also form a standard - that is the result expected from the compiler for the test relating to the task. The task itself is also formulated as a module. This module is passed as input to the compiler, and then the output of the compiler is compared with the standard.

Over time the standard base grows.

After I get the compiler to produce the result for a new task - but before celebrating - I run the compiler over all the standard base. And if something got broken while I was working on the most recent task, the test run fails - in one or multiple tests. So, before I celebrate, I have to go back and fix up, until the test run succeeds on all the standard base.

And, of course, the whole test run over the standard base is automated, and the testing results are aggregated - all I have to do is click a commander button.

I will implement a similar testing infrastructure now for the Herschel backend - the code generator.

Here is a digest of things that have taken place in Herschel since last update:

In the front-end:

* Semantic graph of the subject module is externalized (know as symbol file :)

* Modules can now be imported; their semantich graphs (symbol tables) are read in and merged with the semantic graph of the subject module

In the back-end:

* Well, the backend modules of CP2 have been added into Herschel and renamed accordingly

* Just as with front-end, I have decided to preserve the modular and most of procedural decomposition of the code-generator (GC). This strategy has yielded quick results in the front-end, and I am adopting it in the back-end as well. The authors of OP2/CP2 are fantastic engineers, their design seems lean and decomposition decisions sane to me. Besides, one of our values is preserving maximal compatibility and transparency, so not reinventing the wheel wherever possible should serve that value.

* In order to ‘plunge’ into the CG (code generator) of CP2, I have set an almost educational goal: generate machine text for the simple program x := 1, assuming VAR x: LONGINT. This goal would require to make a ‘vertical slice’ of amendments: amend only those procedures (or their sections) that would be required to compile just that program.

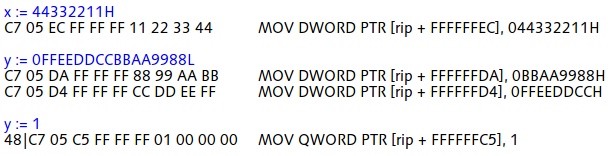

* As of today, the educational goal has been reached. Here is the result:

As you can see, the target program has been somewhat extended; it assumes VAR x: INTEGER; y: LONGINT.

x := ... translates into one move, MOV imm32 -> mem32; because INTEGER is 32-bit, and the constant is encoded into the instruction. This is simple and no different from CP2.

y := FFEEDDCCBBAA9988 translates into two 32-bit moves MOV imm32 -> mem32. This is because the constant doesn’t fit into 32 bits, and thus cannot be encoded directly into the instruction - this is a limitation of amd64. The resulting code is similar to CP2’s output; however, it is produced with a modified flow of command, because the handling of LONGINTs changes in the instruction set and in the code generator.

y := 1 translates into one move, MOV imm32 -> mem64; because LONGINT is 64-bit, 1 is a constant that fits in 32 bits and thus can be encoded into the instruction. The processor performs sign-extension of the imminent constant before sending it to memory over the bus, thus all 64 bits of the target memory operand are properly initialized, despite that the imminent constant is shorter.